AERA-NSF Workshop: Data Sharing and Research Transparency (Part II)

By Bodong Chen in blog

July 27, 2017

Story continued, after Part I.

In the 2.5 days of the workshop, the group continued to deepen the discussion on Data Sharing to more concrete and practical items. In Part II of my personal reflection, I summarize key Data Sharing resources/initiatives to be aware of, possible action items, and some personal random thoughts on future directions.

The 3-day workshop on "Data Sharing and Research Transparency at the Article Publishing Stage" comes to a close today in D.C. pic.twitter.com/XpXIKp459g

— AERA (@AERA_EdResearch) July 27, 2017

Data Sharing in Education Science: The Status Quo

On the second day of the workshop, scholars working at the frontiers of data sharing and open science presented their insights and experiences, together with officers from funding agencies (IES, NSF, NIH) and journal editors.

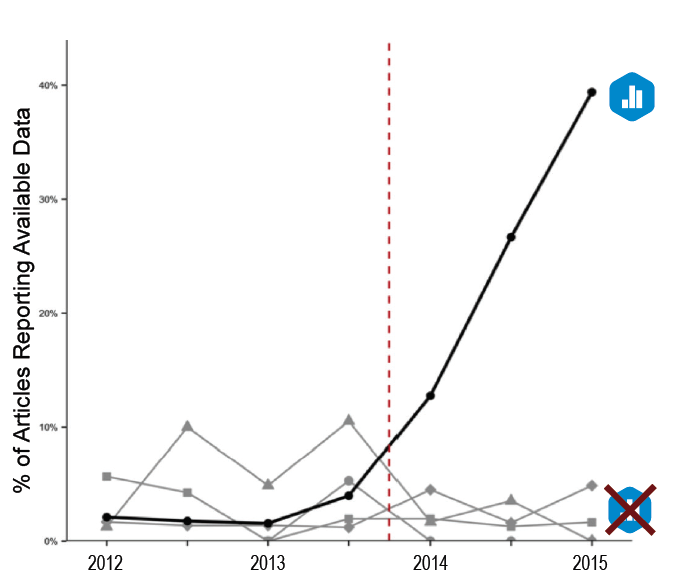

Skip Lupia (UMich), a political scientist and the Chairman of the Board of the Center for Open Science, gave a talk about Why Share, Why Now, What for?. He helped us unpacking two important criteria of scientific research: credibility and legitimacy. Equally helpful was a recognition that transparency encompasses production transparency (the production of data) and analytic transparency (how a scholar goes from data to knowledge claims). He shared important resources from the DA-RT: Data Access and Research Transparency initiative and its TOP Guidelines, supported by 29 top journals across disciplines. He shared preliminary evidence of “small” things like the CoS Badges can effectively spur the adoption of data sharing & research transparency practices. Finally, it was so refreshing to hear that those data sharing initiatives have been and are being championed by qualitative researchers. Data sharing is indeed doable for qualitative researchers and how qualitative researchers perceive data (and I guess more specifically their ontological and epistemological commitments) are of great value to data sharing practices. This is a very important and mindbolging message that I did not expect before this workshop.

Three recognized data sharing repositories — Inter‐university Consortium for Political and Social Research (ICPSR) (UMich), Databrary (PSU & NYU), and Qualitative Data Repository (QDR) (Syracuse) — and their leaders/PIs presented as a panel. It was encouraging to see: 1) ICPSR’s long record of data sharing/archiving since 1962; 2) QDR as a repository focusing on qualitative data; and 3) “even” research videos are also sharable on Databrary. AERA is partnering with ICPSR to set up a dedicated repository for AERA journals, in line with AERA’s forthcoming policies on data sharing. Important questions were asked about possible impact on international researchers — and non- WEIRED populations — who are automatically excluded by some data repositories’ requirements of IRB approvals that are nonexistent in some countries/regions. For me, it was encouraging to learn existing templates and guidelines made available by data sharing repositories that I could refactor for IRB protocols of my projects. Together with emerging policies made by funding agencies, professional associations, and journals, I can feel more confident about pursuing data sharing (as much as possible) when proposing a study.

It was exiting to hear from Maggie Levenstein (Director of ICPSR) about work on researcher credential building blocks that’s under way. Their framework of credentials includes a researcher’s status (incl. academic, geographic, demographics), affiliation, training (e.g., in data sharing), access level, prior contributions, and violations of data stewardship. While companies like ResearchGate are inventing scores to determine researchers’ “status,” why not build a non-proprietary system to enable positive feedback loop for data sharing? A researcher credentialing system with an emphasis on data sharing could raise awareness of data sharing, give credits to those who share, and maintain reputation of data users. Exciting stuff!

Of course, there are still so many issues to be sorted out, especially from an editor’s standpoint, who may constantly face work overload and not wish to add another layer to a journal’s production pipeline. Extremely legitimate. As an early career scholar, I’m quite blind to many challenges an editor or an association’s president might be thinking about. Fortunately colleagues running data repositories may have a lot to offer.

It was helpful to hear from Lupia on the third day about multiple levels and multiple dimensions a journal can consider when implementing data sharing practices. Check out this one-pager explaining 8 modular standards and 3 levels. From the lowest level, for example, a journal could encourage — instead of requiring — authors to put data into a repository. In terms of dimensions, a journal could consider change of citation practices to reinforce secondary datasets being cited, require preregistration of (causal-inferential) research, encourage replication studies, nurture analytic transparency by asking authors to share code, and so forth. As our JLS co-editor-in-chief Susan Yoon nicely worded, “it seems the train has already departed, and we need to catch it.” I was thinking about a slightly different metaphor based on my experiences playing “Real-Time Strategy Games”: there are multiple pathways towards winning a game (e.g., defeating an opponent), and doing one thing (such as collecting a lot of lamber) may unlock other components of winning; further, in a game that requires teamwork, one player (e.g., a journal editor) could focus on one component (e.g., collecting lamber) and share to teammates, while other teammates (e.g., community advocates, data repositories) could handle other parts. We should not drain an editorial team’s limited resources to achieve everything overnight; rather, maybe we could create a roadmap that involves multiple types of contributors — authors, editors, data sharing services, funders, and maybe also publishers (for-profit or not).

Funding agencies have been actively looking into data sharing. For instance, discussions on data and data stewardship are central to NSF Programs such as smart and connected communities and Internet of Things. NIH has 71 data repositories and is very active in Genomic data sharing. To a great extent, broader impacts sought by funding agencies could become more achievable if research data — collected with support from tax-payer dollars — are not sitting in one team’s private server for 10 years but instead shared carefully, ethically, and with proper restrictions.

Like a colleague nicely said:

Data sharing is not a thing in addition to doing science. Data sharing is doing science.

Action Items

A collective resolution has been reached near the end of the workshop and is likely to be shared by AERA soon. Below are action items that seem to me thinkable — if not yet feasible — for different players.

(Disclaimer: These items are in many cases my personal takeaways and do not represent official policies from funding agencies or professional associations. Some ideas are based on group discussion but may not accurately reflect what was originally said.)

Individual researchers. Study issues related to research transparency, reproducibility, replicability, etc. Get familiar with terms like p-hacking, preregistration, reproducible research, preprints, Research Objects, etc. Take a hard look at our own research workflows and think about potential transparency/reproducibility hazards — which could be related to how we name files, store research memos, document R codes, backup data, etc. Stay current with existing data sharing guidelines from funders, journals, and research societies — as the train has ignited its engine if not yet departed. Seek support from our institutions, libraries, data stewards, etc. For those ahead of the game, publish your codes in Github, package algorithms in an R package, etc.

A very important point made by Robyn Read (an early career peer & also an OISE alumnus): academia has been quite intolerant of failures and mistakes. We get afraid of missteps at every stage of a research project. How about making peer-review more collaborative? How about putting drafts/preprints out there early on for open discussions (see this paper originally shared as a draft)? There are certain norms in academia possibly ripe for disruption (a word I typically don’t like using).

Faculty members. Engage students in conversations, in our labs or methodology courses. Point them to training resources on campus or online (webinars, MOOCs, manuals). Help them stay calm, even when we aren’t :-). Maybe even develop new courses about research transparency and reproducible research — typically nonexistent in colleges of Education.

Reproducibility Guide from the R and rOpenSci communities https://t.co/e7DA5PP16h #DataSharing #openscience

— Bodong Chen (@bod0ng) July 26, 2017

Professional associations and their journals. Convey messages to association members and share exemplars. Expect non-supportive reactions and resistance. Treat resistance as good opportunities of conversations, as a colleague said. Engage members — esp. international members — in conversations about data sharing at board meetings, conferences, webinars, doctoral consortia, etc. Talk with other journals under a same society, journals in a same field by “reside” somewhere else, and journals from other fields. Identify early-starters of data sharing and engage them in training and conversations. Maybe consider a new award category for data sharing or research transparency/reproducibility (suggested by Skip).

Data repositories. I look forward to digging into datasets already living in those repositories and studying their resources for IRB preparation. For example, to me knowing data sharing is not the same as making data totally open to anyone was helpful (see the tweet below). It would be very useful if data repositories could further provide dataset exemplars. A 3-minute video walkthrough about the process of depositing a dataset will also help. These resources would help us get a more concrete understanding of data sharing.

One example ICPSR dataset showing ways to protect data: the words #DataSharing sound scary but there are strategies https://t.co/MfZqqUSWxf pic.twitter.com/FUpfc3SZNh

— Bodong Chen (@bod0ng) July 26, 2017

Universities and research institutions. IRB practices are evolving as well. My institution, the University of Minnesota, hosted IRB Town Halls last academic year discussing the changing landscape of Compliance & Ethics. Engage IRB offices in data sharing conversations, if not yet. Also important is a university’s willingness to back up its researchers’ ethical data sharing practices and stand with them against possible vicious attacks. Just like many universities are backing up their faculty members’ fair-use claims.

Towards My Dream World

This workshop was so helpful in getting me to think more deeply about data sharing and possible actions I can take as a researcher, faculty member, new investigator, author, international person whose home country’s ethical review practice is still emerging.

Below I explore a few other components touched upon (implicitly) during the workshop. I am quite excited about them even though they are still vague and paradoxical to me, or are totally against our current norms and routines. So I’m now throwing them out there for further discussions.

Control. Before attending the workshop, I thought data sharing is to put data out there — totally public and totally beyond one’s control after data get shared. That is a scary scene for many of us. As researchers, we can still remain a certain level of control over a shared dataset — not based on whether we like a data requester or whether a requested usage contradicts our published findings.. But it seems controls pre- and post-sharing are areas that could be further discussed.

Here I’m especially curious about possible controls after data sharing (post-sharing). Linked to work on researcher credentials, data repositories could have an opportunity to think twice about a data request from someone with many previous violations. For data sharers, they may wish to be able to track who are accessing my shared data, how, and where results are published; so are data repositories.

Related to post-sharing controls, we also image a shared dataset to keep evolving after secondary analysts invest time to recode, reshape, or link them. When we allow a new version of a dataset to appear? Or do we allow a dataset to fork? Who can make these judgements? Intriguing questions.

How about research participants? While our currently practices seek clearcut delimiters of data ownership/stewardship in a lifecycle of project. For example, when an informed consent is made, we say data ownership is transferred from a participant to a researcher; similarly, when a dataset gets submitted to shared to a repository after de-identification, we see another change of data ownership and stewardship. However, in a deeply connected world, should research participants remain control of their own data? Would it be more ethical if participants have the choice to stay aware of work conducted on data they contributed — while remaining hidden from data users? This may seem totally quite counter-intuitive. But see yourself the Kavli HUMAN project, the shift from an Internet of Information to an Internet of Actions, and a move to store medical records on blockchains.

Ubiquitous networking has transformed cyberspace into a pervasive layer atop our physical reality. ( source)

What’re the implications on research data? What does it mean to data sharing?

Linked data and maintained contexts. Information loss accompanies the lifecycle of research data – from collection, cleansing, transformation, analysis, synthesis, publication, to meta-analysis… We are increasingly losing the context where data were originally collected. In a “smart” connected world, as described above, how about data sharing is not too distant from where data originally reside? Data sharing is just like adding faces and masks to data and selectively expose data to secondary users? In my experiences with secondary data, I saw data shared in forms PDFs, CSVs, Excels, JSONs, and XMLs. How about we say those data formats or shapes all suck, and we wish to (safely & ethically) maintain possible connectivity when sharing data instead of cutting connections?

Broader conceptions of value and incentives. I shared an idea in a breakout group discussion about crediting data sharers with a crypto- or digital currency, with which they can “purchase” other data. Like the Ethereum blockchain, data sharers share data with “smart contracts” attached to them and users “pay” a price in a digital currency based on their status (e.g. free for a student or a researcher without funding, a fair amount for someone having funding, expensive for someone with many prior violations). One colleague said this “monetization” was against the spirit of data sharing. Probably. But is it?

My intention of mentioning digital currencies was simply to recognize value generated by research work. For data sharing, if citations to a shared dataset with DOIs provide an “alternate currency” worth considering for tenure promotion. What else mechanisms could be created to promote and facilitate data sharing and — research integrity, in general? I would argue citations might be too narrow, and not enabling enough. My citation count may signal my prior achievements and help me get funded. But how many grant proposals can I possibly write each year? And a citation count does not represent my reputation in research collaboration, data sharing, peer review, conference organization, etc. As academics, what values do we generate from our work? How to give ourselves credit (not until once in a lifetime tenure promotions)? Crazy? Maybe. But academia is outsourcing — if not losing — values generated by us to others – for-profit publishers, data-rich startups, etc. See the fight for Open Access against the “paywall”, sometimes in dramatic unlawful manners. See our love-hate relationship with the Impact Factor or other algorithms like h-index that influence who get jobs, tenure, and grants. We can stay proud of those nicely formatted PDFs published in high Impact-Factor journals. But what other things can we care more about? If we’re not standing with various types of value generated by our work — during data collect, data analysis, peer review, journal editing, etc. — it’s probably a matter of time another startup or rich company to claim ownership of a piece of our work.

If ten years later learning is earning (see the video below), I predict data sharing, and other value generation or value sharing activities in scholarly research, will also be recognized — not necessarily with money but with something that can more holistically represent the value of our work and can be used/transacted to enable further scholarly activities.

Finally, my heartfelt thanks to our leaders — esp. Dr. Felice levine from AERA and Drs. Susan Yoon, Jan van Aalst & Bill Sandoval from ISLS — in engaging me in this exciting and rewarding journey. I look forward to working further on data sharing and in various inter-related areas.

- Posted on:

- July 27, 2017

- Length:

- 13 minute read, 2672 words

- Categories:

- blog

- Tags:

- open science data sharing